With support from NSF and MIT, we have made considerable progress since 2000 in each of our main thrust areas: learning, assessment, and tutoring. Our studies imply that, of the various instructional elements in the course, electronic tutorial-type homework generates by far the most student learning, as shown by score improvement on the MIT final, and is comparable to group problems on standard tests of conceptual understanding. We have developed extraordinarily accurate assessment based on the process of a student working through a tutorial. Although integrated seamlessly within the instructional activities, it has the power to assess students’ skills on a fine grid of topics, allowing targeted tutoring to improve students’ scores as well as prediction of students’ performance on high-stakes tests. Finally, we have developed techniques to measure the learning from individual tutorials. For example, our recently developed ability to accurately measure the amount learned per unit of student time on a single tutorial allows comparison and improvement at the micro level. This will allow us to improve tutoring both by improving the individual tutorials and by determining what pedagogy (e.g. tutorial-first vs. problem-first) works best. We have also found evidence for the effectiveness of hints in arriving at correct solutions. Further studies will help us improve our hint structure and increase its effectiveness in tutoring.

Learning

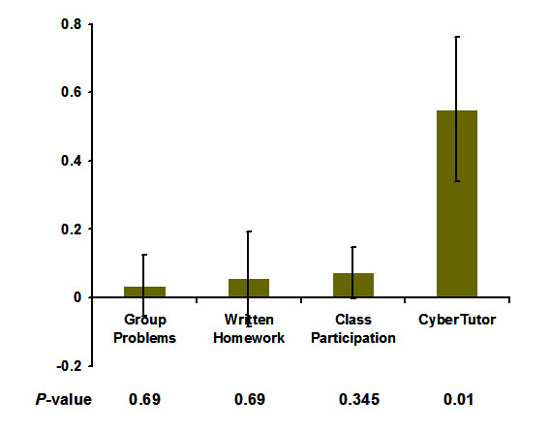

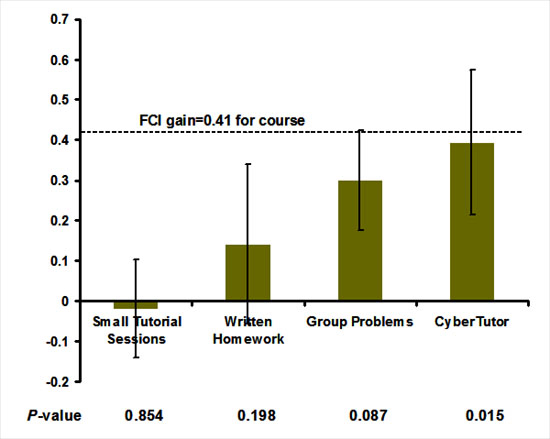

We have measured the gain in test scores on traditional final exams, as well as on more conceptually oriented standard assessment instruments, in an introductory physics course in Newtonian mechanics. (The gain is the fractional reduction in wrong answers between a test given before the course and one given afterwards.) Correlating this gain with the various instructional elements in the course showed that the use of electronic tutorial-type homework correlated with gain on the MIT final examinations, and that both it and collaborative problem solving in class correlated with gain on standard tests of conceptual understanding.
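The gain defined above can be written out as a short calculation. The following is a minimal sketch, not the authors' analysis code; the function name `fractional_gain` and the example numbers are hypothetical and chosen only for illustration.

```python
def fractional_gain(pre_correct, post_correct, total_items):
    """Fractional reduction in wrong answers between a pre-test and a post-test."""
    wrong_before = total_items - pre_correct
    wrong_after = total_items - post_correct
    if wrong_before == 0:
        # A perfect pre-test leaves no wrong answers to reduce.
        raise ValueError("no wrong answers on the pre-test; gain is undefined")
    return (wrong_before - wrong_after) / wrong_before


# Hypothetical student: 12 of 30 correct before the course, 21 of 30 after.
gain = fractional_gain(pre_correct=12, post_correct=21, total_items=30)
print(f"gain = {gain:.2f}")
```

Here the student goes from 18 wrong answers to 9, so half of the original errors are removed. Normalizing by the number of wrong answers on the first test lets students with different starting scores be compared on the same scale.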

Students who do more myCyberTutor electronic homework show significant (p = 0.01) gain on the MIT final exam taken in Spring 2001 relative to the one taken in Fall 2000. No other instructional element in the course correlates significantly with improvement on these tests.

(see What course elements correlate with improvement on tests in introductory Newtonian mechanics? – Morote, E. S., Pritchard, D.: ERIC #ED463979).

Electronic homework and cooperative problem solving correlate significantly with improvement between pre-course and post-course testing with the Force Concept Inventory, a test of conceptual knowledge in Newtonian mechanics. Data compiled by Prof. Craig Ogilvie, 8.01 Spring 2000. (see What course elements correlate with improvement on tests in introductory Newtonian mechanics? – Morote, E. S., Pritchard, D.: ERIC #ED463979).

Assessment

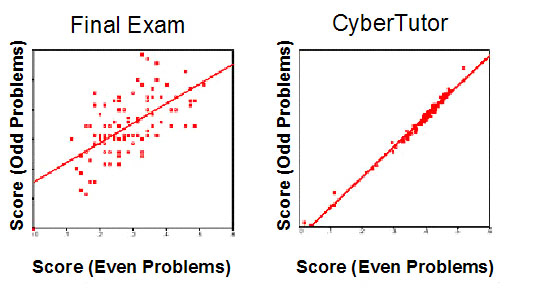

We have developed an assessment that has roughly 100 times less testing error than a traditional final examination and has the added advantage of being integrated within the tutoring process. It is based on assessing the process of the student working through an interactive problem, not just the correctness of the final response. The reduced testing error allows the reliable determination of the student’s skill on a large number of sub-topics within the curriculum without the need for distracting tests. These assessments are based on variables that are available only within an online tutorial environment (or in direct student-teacher interaction), including the number of hints, wrong answers, and solutions requested, and the time to completion; none of this information is available within traditional testing environments. The insight from this detailed assessment allows the prediction of scores on standard tests with a predictive validity coefficient of 0.77. This enables students to monitor their progress on the scale of the test they are studying for, and it allows teachers to raise the scores of their classes on standard high-stakes tests.

Testing error is determined by dividing the test (or the tutoring problems) into two equivalent subtests (e.g. even- and odd-numbered problems). A highly reliable instrument would measure the same score on each equivalent subtest, and the points would lie on the diagonal. The reliability of the final exam is 85% (i.e. 15% of the observed variance is due to testing error), whereas the electronic homework had a reliability of 99.76% (i.e. only 0.24% of the observed variance is due to testing error). Data from MIT course 8.01 in Spring 2001.

(see Reliable assessment with CyberTutor, a web-based homework tutor – Pritchard, D., Morote, E. S.).
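The split-half procedure described above can be sketched in a few lines. This is an illustration under stated assumptions, not the authors' method: it uses the plain Pearson correlation between odd- and even-numbered subtest totals as the reliability estimate (the source does not say which estimator or correction was applied), and the score matrix is made up.

```python
from statistics import mean


def pearson(xs, ys):
    """Pearson correlation between two equal-length lists of numbers."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def split_half_reliability(item_scores):
    """item_scores holds one list of per-problem scores for each student."""
    odd_totals = [sum(row[0::2]) for row in item_scores]   # problems 1, 3, 5, ...
    even_totals = [sum(row[1::2]) for row in item_scores]  # problems 2, 4, 6, ...
    return pearson(odd_totals, even_totals)


# Hypothetical scores (1 = correct, 0 = wrong) for four students on six problems.
scores = [
    [1, 1, 1, 1, 1, 0],
    [1, 0, 1, 1, 0, 0],
    [0, 0, 1, 0, 0, 0],
    [1, 1, 0, 1, 1, 1],
]
r = split_half_reliability(scores)
print(f"reliability estimate: {r:.3f}; share attributed to testing error: {1 - r:.3f}")
```

If the two halves always agreed, every student would fall on the diagonal of the odd-versus-even plot and the estimate would be 1. The share attributed to testing error is taken as one minus the reliability, matching the 85% and 15% pairing quoted for the final exam.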

Tutoring

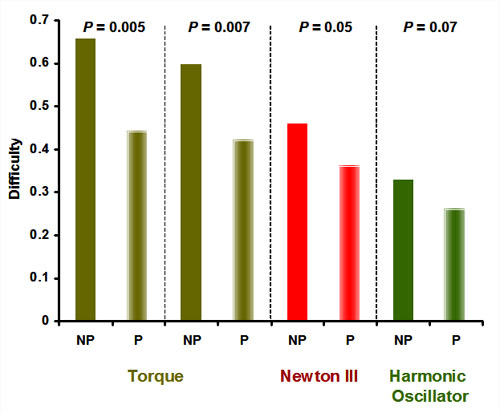

Using a commercial tutoring program called myCyberTutor (from effedtech.com), together with the assessment algorithms developed through our research, we have studied the effectiveness of tutorial-type problems in helping students work through subsequently assigned homework-type problems (which also had embedded help). In a class of 80 students, we observed strongly significant improvement due to a single tutorial. In a larger class it would be possible to compare the effectiveness of alternate versions of a tutorial on a particular topic, thus allowing the improvement of individual tutorials.

As a result of working through a preparatory tutorial, the group “Prep” (P) has significantly less difficulty on each of several homework problems than the equally skilled group “Non-Prep” (NP), which did not do the tutorial first. The ability to measure the learning due to a single tutorial is unique and will allow the improvement of such tutorials.

(see Effectiveness of tutorials in web-based physics tutor – Morote, E. S., Warnakulasooriya, R., Pritchard, D.: in preparation).

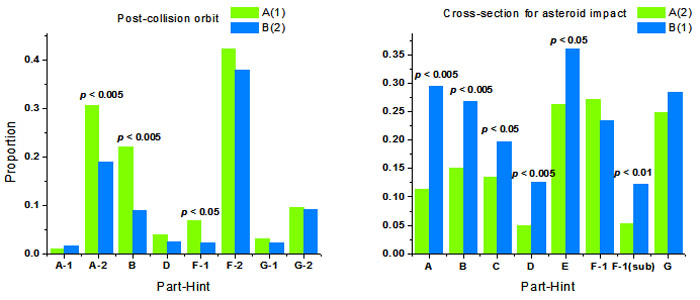

We also find evidence for the effectiveness of hints. Administering related problem pairs to two equally skilled groups in different orders, we find that the group that solves a given problem second in its pair requests 12% fewer hints than the group that solves it first. The groups also benefit from the hints in the first problem when answering the second problem in the pair. The maximum reduction in hint requests (19%) occurs for a problem solved after its related tutorial problem. These findings support the cognitive theory that feedback is a form of information that helps students learn. Further studies will help us improve our hint structure and increase its effectiveness in tutoring.

Group A, which solves “post-collision orbit” first, requests more hints on that problem and fewer hints on its related problem, “cross-section for asteroid impact,” which it solves second. The same conclusion holds for group B, which requests fewer hints on “post-collision orbit” after having solved “cross-section for asteroid impact” first. The proportion is the fraction of students using a particular hint as the last hint before submitting the correct solution, relative to the total number of students giving the correct solution to the main part of interest.

(see Hints really help! – Warnakulasooriya, R., Pritchard, D.).
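The proportion used in the hint figure can be computed from per-student records. The sketch below is illustrative only: the record layout, the hint labels, and the function name `last_hint_proportions` are assumptions, not part of the tutoring software or the authors' analysis.

```python
from collections import Counter


def last_hint_proportions(last_hints):
    """Share of correct solvers whose last hint before the correct answer was each hint.

    last_hints has one entry per student who answered correctly: the label of
    the last hint opened before the correct submission, or None if no hint was used.
    """
    n_correct = len(last_hints)
    if n_correct == 0:
        return {}
    counts = Counter(h for h in last_hints if h is not None)
    # The denominator is every correct solver, including those who used no hint.
    return {hint: count / n_correct for hint, count in counts.items()}


# Hypothetical records for eight students who solved the part of interest.
records = ["H1", "H2", None, "H2", None, "H3", "H2", None]
props = last_hint_proportions(records)
for hint in sorted(props):
    print(hint, props[hint])
```

Because students who used no hint stay in the denominator, the proportions for one problem need not sum to 1. Comparing the same hint's proportion between the group that saw the problem first and the group that saw it second gives the kind of reduction reported above.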

Future Objectives

Over the next few years, we hope to learn how to reliably measure the skill of students on a fine grid of topics. This will enable us to tutor individual students on their specific areas of weakness. It will also help us address student difficulties in physics caused by poor preparation in foundational skills. A longer-term goal is to enable the tutor to address each student in the learning style in which that student learns best. Preliminary data show that we can easily measure the problem-solving style of our students, and we expect future research to reveal learning styles as well.
